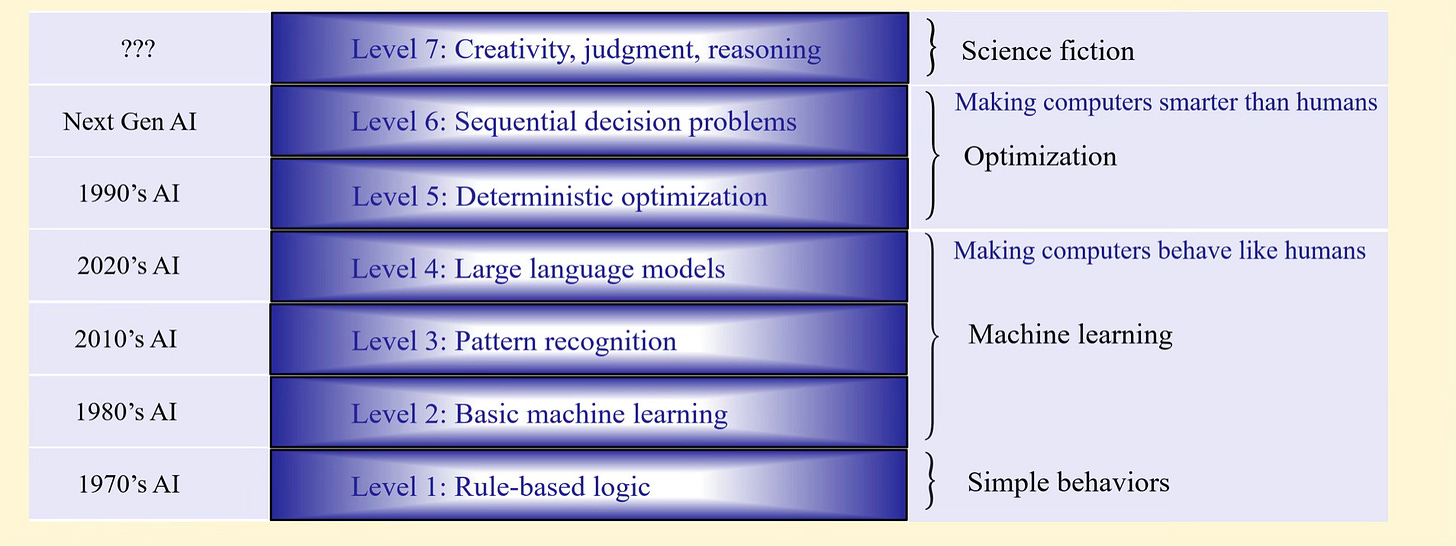

Graphic from “The 7 Levels of AI” by Warren B. Powell. Used with permission.

Electricity Use for AI

The “energy implications of data centers” is a subject above my pay grade. In contrast, if you were to ask me about the energy use of heat pumps, I could find solid ground to answer your question. The rules that control heat pumps are the immutable laws of thermodynamics.

But data centers…if there’s solid ground somewhere, I haven’t found it. What level of query? Google searches? ChatGPT? AI version 1, 2, 3, or 4? General AI? What type of LLM (Large Language Model) for training? How does Moore’s law fit in? Or doesn’t it?

The subject of “energy for AI” makes me wish for the clarity of the second law of thermodynamics. Heat pumps! I can understand heat pumps. I can’t provide clarity on AI, but I can still write about it. After all, that is what everybody does.

AI versus Search

AI versus search, oversimplified.

Search attempts to give you the information to answer a question, ranking that information by its relevance to your question. In contrast, chatbots are a form of AI: they attempt to answer your question directly. “Search” is basically a keyword search, while AI is generally trained on a data set.

How much electricity does an AI query require?

Which query? Which version of AI?

Warren B. Powell wrote The Seven Levels of AI, published by Princeton in November 2024. In this paper, he places ChatGPT at level 4 out of 7. (Powell’s chart is at the head of this post.) ChatGPT is built on machine learning and a large language model (LLM).

Powell describes the highest level of AI, level 7, as “science fiction.” However, people describing their own AI tend to exaggerate its abilities. As Powell writes: “The large language models such as ChatGPT are described as performing at this level [level 7], but this is only because it mimics word patterns that are produced by people.”

Another issue is the scope of the AI. AI for limited and well-defined questions requires less computing power than more general AI. Level 7 (very general AI) is the stuff of science fiction. More limited AIs are here now.

How do we estimate AI energy requirements?

Many descriptions of AI include a statement such as “A.I. requires ten times the electricity as a Google search.” Really? Which level of AI? Which question?

There is no overall answer. Here are three examples of estimating the requirements for AI.

1) Stackpole, MIT: 2% of the world’s energy

Beth Stackpole wrote AI has high data center energy costs — but there are solutions. The article was published in January 2025 by the MIT Sloan School of Management.

According to this article, AI already requires 2% of the world’s energy, and its use continues to grow. Shall we panic? No. Stackpole also notes that making a graphic with AI uses about the same amount of electricity as charging a smartphone. She also shows ways to limit AI energy use and still get good results. For example, if you use a large data set to train AI, you can often stop the training after 20% of the data has been accessed and still get much the same results.

In honor of Stackpole’s work, I decided to make my first graphic with AI. It is at the bottom of this post.

2) Pandreco, Energy IQ: Electricity will limit AI use

Pandreco posted Power from the People in his Energy IQ blog in March 2025. He clarifies the different aspects of AI that require energy, from chip making to training to answering queries. He bases his views on his own knowledge and on a detailed podcast in which Lex Fridman interviewed industry experts Dylan Patel and Nathan Lambert.

Overall, Pandreco expects AI to be limited by the availability of electricity, not by chips. He considers a kind of worst-case scenario in which every search that we do nowadays becomes a general AI query. (I think the queries that Pandreco describes are about level 5 or 6 in Powell’s schema.) Pandreco estimates that each query uses ten times the electricity of a simple search. If we do as many queries as we currently do searches, we will need to build more power plants.
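The scaling in Pandreco’s worst case can be sketched as back-of-envelope arithmetic. This is a minimal sketch, not Pandreco’s own calculation: the ten-times multiplier is the figure quoted above, while the search volume and energy per search are hypothetical placeholders chosen only to make the arithmetic easy to follow. They are not real statistics.

```python
# Back-of-envelope sketch of Pandreco's worst case: every search becomes an AI query.
# The "ten times" multiplier is the figure quoted in the post; the search volume
# and energy per search used below are HYPOTHETICAL placeholders, not real data.


def extra_daily_energy_wh(searches_per_day: float,
                          wh_per_search: float,
                          ai_multiplier: float = 10.0) -> float:
    """Additional energy per day (Wh) if each search is replaced by an AI query."""
    search_wh = searches_per_day * wh_per_search  # today's energy for plain searches
    ai_wh = search_wh * ai_multiplier             # same number of queries, done by AI
    return ai_wh - search_wh


def average_power_mw(daily_wh: float) -> float:
    """Convert energy per day (Wh) into the average round-the-clock power (MW)."""
    return daily_wh / 24.0 / 1_000_000.0


if __name__ == "__main__":
    # Placeholder inputs: 1 billion searches a day at 0.02 Wh each (hypothetical).
    extra = extra_daily_energy_wh(searches_per_day=1e9, wh_per_search=0.02)
    print(f"extra energy: {extra / 1e6:.0f} MWh/day "
          f"= about {average_power_mw(extra):.1f} MW of continuous generation")
```

Whatever numbers you plug in, the extra demand grows in direct proportion to the number of queries and to the multiplier minus one. That is why the “ten times” figure matters so much to this argument.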

3) Marcel Salathé, Engineering Prompts: Urban myths

Marcel Salathé is a professor at EPFL, one of the Swiss Federal Institutes of Technology. Salathé kept seeing the “ten times as much electricity” statement for AI, so he investigated its source. In January 2025, he posted his conclusions on his Engineering Prompts blog: Does ChatGPT use 10x more energy than a standard Google search?

His answer is yes: ChatGPT does use ten times as much electricity as a search. However, he found that both searches and AI now use about one-tenth of the electricity they formerly used. Therefore, while AI uses more electricity than search, the situation is not as dire as it is usually described. He concludes that “…an average LLM request-response interaction using the LLaMA 65B model (with about 200 tokens total) consumes approximately 0.2 Wh.” That is not a lot of electricity. My conclusion is that you should use LEDs and stop worrying about your use of AI.
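To put Salathé’s 0.2 Wh figure next to the LED advice, here is a minimal sketch of the arithmetic. The 0.2 Wh per query comes from Salathé; the 10 W LED bulb and the 20 queries a day are assumptions for illustration only, not figures from any of the sources.

```python
# Energy comparison sketch: LLM queries vs. an LED bulb.
# WH_PER_QUERY is Salathé's estimate (~0.2 Wh per LLaMA 65B request-response).
# LED_WATTS is an ASSUMPTION (a typical household LED bulb), not from the sources.

WH_PER_QUERY = 0.2   # watt-hours per query (Salathé's estimate)
LED_WATTS = 10.0     # assumed LED bulb power draw, in watts


def queries_per_led_hour(led_watts: float = LED_WATTS,
                         wh_per_query: float = WH_PER_QUERY) -> float:
    """How many queries use the same energy as one LED bulb lit for one hour."""
    led_wh = led_watts * 1.0  # power (W) x time (1 h) = energy (Wh)
    return led_wh / wh_per_query


def yearly_query_energy_kwh(queries_per_day: int,
                            wh_per_query: float = WH_PER_QUERY) -> float:
    """Yearly energy, in kWh, for a given number of queries per day."""
    return queries_per_day * wh_per_query * 365 / 1000.0


if __name__ == "__main__":
    print(f"{queries_per_led_hour():.0f} queries use about as much as a 10 W LED for one hour")
    # 20 queries a day is a hypothetical usage level, not a measured figure.
    print(f"20 queries/day is about {yearly_query_energy_kwh(20):.2f} kWh per year")
```

Under these assumptions, one hour of a single LED bulb covers about 50 queries, and a fairly heavy personal habit of 20 queries a day comes to well under 2 kWh a year.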

As Salathé describes the situation, most of the “ten times” quotes link back to a sort of urban myth. (“Urban myth” is my phrase, not used by Salathé.) John Hennessy, Alphabet’s chairman, was interviewed in 2023, and he estimated this “ten times” number. Nothing about his statement was well-defined. But people have been using this “ten times” statement ever since.

The bigger picture

There is no solid ground. Everything is evolving. AI is evolving. The questions are evolving. The amount of electricity which will be used for AI is evolving.

In my opinion, much of the hype about advanced AI is just that…hype. “Wow! Soon we will have a brave new world of robots that are smarter than us!”

I don’t think advanced AI is the future of AI. In contrast, modest present-day AI is very useful. Many authors know that AI chatbots can do good research for them, and save immense amounts of time. AI is also being used for complex but limited situations, such as managing the PJM Interconnection queue.

I think it will be small-scale AI that will change many aspects of our lives.

People still matter

If there’s an AI revolution, people will still be useful. You need someone with their head screwed on to evaluate the results of the AI. Unlike the results of a simple search engine, AI can have hallucinations. For example, AI can quote an article that doesn’t exist.

Our daughter led some informative research on AI and hallucinations. Rina Palta, Julia Angwin and Alondra Nelson investigated using AI to answer simple questions about voting. Their results were published as Seeking Reliable Election Information: Don’t Trust AI in Proof News in February 2024.[1] Their test questions were not complex issues such as “how will tariffs affect the American economy?” No, these were questions about when polls open, where polls are located, and what documentation you need in order to vote. AI frequently gave wrong answers.

AI is still evolving.

GIGO

Back in the old days, computer users said this about data: “Garbage in, garbage out.” Still true! And currently, only humans can identify garbage.

My conclusion is that AI is useful and will get even more useful in the future. But only humans can ask the right questions and identify reasonable answers.

ChatGPT image of me in front of a nuclear plant. In the first version that the chatbot provided, I had too many wrinkles. I asked the chatbot to take them out. Just because I’m in my seventies doesn’t mean I want to look old.

[1] This work was part of the AI Democracy Projects. These projects are a collaboration between Proof News™ and the Science, Technology, and Social Values Lab at the Institute for Advanced Study.
